As AI systems become embedded across business operations, the question isn’t whether they require governance, but how to implement it effectively. This is even more crucial now that we know that there will be no delay in the EU AI Act’s enforcement.

So you should already be deploying a proper AI governance framework. Many organisations already rely on strong compliance foundations like GDPR. However, AI introduces new layers of complexity and risk that require tailored governance, sharper accountability, and more rigorous oversight. There's simply no universal template.

You could structure your AI governance around the EU AI Act's timeline. Or you could treat the EU AI Act as the most stringent text, much as the GDPR became a de facto international standard. However, the challenge is to move beyond general principles and design operational governance frameworks, not just because “the AI Act says so,” but because it’s essential for the sustainable & trusted use of AI.

Proper governance allows you to identify & navigate the risks stemming from the use and development of AI systems. Being transparent & responsible when using AI is also a reputational and competitive advantage, and it can help lower compliance costs.

We recently hosted an AI Governance workshop at the CPDP.AI event in Brussels with around 30 participants, mostly DPOs, privacy leads and compliance professionals. The results are shared throughout this article.

Let's go through the most important AI Governance points.

We kicked off the workshop by asking participants to rate their own organisation’s AI compliance. The outcome? Most see themselves at the early stages of readiness for the EU AI Act. Many are just starting to bridge the gap between high-level obligations and daily operations.

Make it fit: Tailor governance to your organisation

Effective AI governance isn’t about replicating a rigid standard. The key is to ensure your governance is proportionate, practical, and firmly anchored in how your business actually works:

- Consider how to develop internal templates for AI-specific reviews that align with your existing vendor assessment processes.

- Define how your organisation will operationalise AI ethical principles, just as you did under the GDPR: adopt the same mindset as privacy or security by design, and integrate fairness, explainability, and robustness directly into the AI development lifecycle.

- Map your AI systems, whether developed in-house or sourced externally, by leveraging the same approach you used for your ROPA.

- Assess risks through your established risk evaluation steps, but with AI goggles.

- Clarify ownership, as you already did for your stakeholders, who know their roles.

Integrate, don't isolate

AI governance shouldn’t live in its own silo.

It needs to reflect your organisation’s size, industry, risk appetite, and way of operating. Smaller companies might not have dedicated AI teams, and that’s fine. There are no obligations to create dedicated functions.

Many organisations weave AI oversight into their risk or ethics committees, or establish dedicated AI boards that work closely with privacy, audit, and security teams. For example, you might have the CTO handle technical development, the Chief Privacy Officer focus on data compliance, and the Chief Risk Officer oversee AI-specific risks.

In any case, AI can’t be managed in isolation. It needs multi-department scrutiny and alignment with corporate values, so that departments coordinate effectively and diverse risks are properly understood and managed.

Drawing up a RACI matrix can be an effective way to determine who is responsible for what.
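A RACI can also be kept as plain data and checked automatically. The sketch below is only an illustration: the activities and the letter assignments are hypothetical, the roles simply echo the examples mentioned earlier, and the rule of "exactly one Accountable and at least one Responsible per activity" is a common convention assumed here, not a requirement of the AI Act.

```python
from collections import Counter

# Hypothetical activities and assignments, for illustration only.
# R = Responsible, A = Accountable, C = Consulted, I = Informed.
RACI = {
    "Approve a high-risk AI use case": {
        "Chief Risk Officer": "A",
        "Chief Privacy Officer": "R",
        "CTO": "C",
    },
    "Set bias tolerance thresholds": {
        "Chief Risk Officer": "A",
        "CTO": "R",
        "Chief Privacy Officer": "C",
    },
    "Maintain the record of AI systems": {
        "Chief Privacy Officer": "A",
        "CTO": "R",
        "Chief Risk Officer": "I",
    },
}


def find_raci_gaps(matrix):
    """Return a list of human-readable problems found in the matrix."""
    problems = []
    for activity, assignments in matrix.items():
        counts = Counter(assignments.values())
        # Assumed convention: one single owner is accountable per activity.
        if counts["A"] != 1:
            problems.append(
                f"{activity}: expected exactly one Accountable, found {counts['A']}"
            )
        # Assumed convention: someone must actually do the work.
        if counts["R"] < 1:
            problems.append(f"{activity}: no Responsible role assigned")
    return problems


if __name__ == "__main__":
    for line in find_raci_gaps(RACI) or ["No gaps found"]:
        print(line)
```

Running such a check whenever the matrix changes is one way to notice decision points that nobody owns yet.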

During our discussions, we saw how diverse these approaches can be. In some companies, AI governance emerged from IT or data teams. In others, it started under privacy teams who had early visibility on regulatory risk. Decision points, such as who sets thresholds for bias tolerance or who greenlights high-risk models, are still evolving. Many organisations still lack clear processes to consistently involve all relevant teams.

In any case, there is no one-size-fits-all solution. The essential part is the cross-functional engagement, and there are different operating models depending on size & sector.

We are convinced that the Data Protection Officer (DPO) should be in charge of AI Compliance. In the vast majority of cases, artificial intelligence involves the processing of personal data. It is therefore logical that new data-related issues are fully integrated into the broader scope of the DPO's responsibilities.

Develop an AI strategy that goes beyond mere compliance

Effective governance is defined by how decisions are made, enforced, and supported across the company. Ask yourself:

Is AI governance in your company treated as a mere compliance task, or as a critical capability?

When ethics and commercial pressures collide, which takes priority?

AI governance must be more than static policies. It’s a living framework, shaped daily by choices that reflect your company’s values, whether it’s a CEO funding training despite budget concerns, a legal counsel questioning a risky but profitable use case, or engineers delaying a launch to address bias.

Establish a managerial-level AI strategy: a clear AI strategy is foundational for sound governance. It signals that AI isn’t treated as an isolated technical experiment or scattered set of projects, but as a strategic capability managed with purpose and oversight.

At the leadership level, and in order to avoid fragmented, inconsistent AI initiatives across the business, an AI strategy should define:

- The overarching objectives for AI use,

- The rules and parameters for deployment, clarifying where AI should or shouldn’t be applied,

- And the financial and human resources allocated to make it work.

Guide operational decisions from the top down: once set at the managerial level, this strategic framework becomes the reference for all tactical and operational decisions, from project evaluations to risk assessments, post-deployment monitoring, and assigning responsibilities. It ensures that data scientists, product teams, compliance officers, and partners align with the organisation’s values, legal duties, and risk appetite.

Integrate governance directly into strategic planning: Governance shouldn’t be an afterthought. It must be embedded into how AI projects are budgeted, prioritized, and measured, aligning with broader risk management and quality goals.

Reducing risk exposure through governance

Depending on the stage of the AI lifecycle and the role of each stakeholder, different risks may arise. Governance plays a crucial role in identifying, assessing, and implementing measures to address these risks. Those risks can pertain to matters such as:

- Regulatory (sanctions), reputational (loss of clients);

- Cyber attacks, bias or hallucinations, discrimination;

- Loss or leak of confidential data, intellectual property.

It’s important not to look at each risk in isolation but to consider them collectively, always keeping the purpose of the AI use case in mind.

- For example, an AI system used by HR to evaluate candidates poses very different risks than an AI tool that simply monitors daily public news.

Once the risks are identified, appropriate mitigation measures should be put in place — such as anonymising personal data, strengthening contractual clauses with providers and users, or scheduling regular audits.

If these measures prove insufficient to adequately reduce the risks, or if they fail to protect the rights and freedoms of individuals, it may be necessary to adopt even stricter safeguards. In some cases, this could ultimately mean deciding not to pursue a particular AI use case at all.

Mapping & connecting to data governance

Ensure AI systems are governed with full visibility into their data foundations. Link your data management quality and accountability practices to AI oversight.

Knowing the origin and method of data collection is essential, not only for legal compliance, but also for building trust & making AI systems more accountable. Holding unnecessary data increases the risk of breaches or misuse in AI systems.

To keep your compliance up-to-date, strong data quality workflows & controls are essential. One example is a questionnaire to identify and assess AI tools in light of the AI Act.

Creating a record of AI systems is an essential step here & will give you the necessary visibility.

Some organisations only have a loose list of tools. Others run internal surveys or change management tickets to spot new AI uses. A few incorporate these updates into their ROPA (GDPR record) and conduct yearly or on-demand reviews. Others hired someone to analyze all the use cases by contacting all departments & making a list of what they found.

Whatever your approach, you need to start mapping your AI systems somewhere, somehow. Success then depends on your workflows: standardising the information being collected, partially automating the collection process where possible & regularly updating the information to keep the AI mapping relevant.

We simplified it for you over here.
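To make "standardising the information being collected" more concrete, here is a minimal sketch of what one entry in a record of AI systems could look like, with a helper that flags entries overdue for review. The field names, the sample entries and the one-year review interval are assumptions made for this illustration. They are not a template prescribed by the AI Act or by any specific tool.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class AISystemRecord:
    """One entry in a record of AI systems (hypothetical field set)."""
    name: str
    owner: str                # department or person answerable for the system
    purpose: str              # what the system is used for
    source: str               # "in-house" or "external"
    uses_personal_data: bool
    last_reviewed: date


def overdue_for_review(records, today, max_age_days=365):
    """Return the names of systems not reviewed within max_age_days."""
    cutoff = today - timedelta(days=max_age_days)
    return [r.name for r in records if r.last_reviewed < cutoff]


# Sample entries, invented for illustration.
records = [
    AISystemRecord(
        name="CV screening assistant",
        owner="HR",
        purpose="Evaluate job candidates",
        source="external",
        uses_personal_data=True,
        last_reviewed=date(2024, 1, 15),
    ),
    AISystemRecord(
        name="News monitoring bot",
        owner="Communications",
        purpose="Monitor daily public news",
        source="in-house",
        uses_personal_data=False,
        last_reviewed=date(2025, 3, 1),
    ),
]

if __name__ == "__main__":
    print(overdue_for_review(records, today=date(2025, 6, 1)))
```

Collecting the same fields for every system is what makes partial automation possible, for example a scheduled job that reminds owners when a review is due.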

From vague promises to real policies

Establishing policies is essential to define your organisation's position on AI. Policies help control and moderate usage and reduce the risk of incidents.

- Set up clear internal rules for developing, procuring, testing, deploying and monitoring AI.

- Define how ethical principles (like fairness, accountability, transparency) are put into practice.

- Provide tools for recording the technical documentation of AI systems and the transparency documents provided by vendors.

- Provide a tool for assessing the AI maturity of service providers or for scoring tenders.

- Review providers' privacy policies, T&Cs and compliance documentation, as far as limited access or negotiating power allows, to consider aspects such as intellectual property and cybersecurity.

- Draft model contractual clauses to address AI-related risks.

Avoid vague promises in your policies like "we will prevent hallucinations or bias" and replace them with concrete requirements such as "undergo testing of X kind, within X days, and deploy one week after confirmation".

Think of policies like software: they need regular updates to stay relevant.

Beyond the AI Act's literacy obligation

While the AI Act (Article 4) references AI literacy, there are no direct fines tied to it. But regulators could well consider a lack of literacy an aggravating factor when assessing violations of the law once it starts taking broader effect this August, much as they might with a lack of due diligence in dealing with bias.

The living repository of the EU AI Office gives examples to support the implementation of literacy (but does not automatically grant presumption of compliance).

AI literacy goes far beyond checking boxes. It’s about building an organisation that truly understands why it uses AI and how to manage the risks and benefits. Regularly training teams and ensuring they use AI tools responsibly is key & cannot be a one-shot event.

Why? Take shadow AI. It's your early signal of a gap between the speed of AI innovation & organisational governance. A real-world example is an internal security incident like the one Samsung faced when engineers leaked proprietary code by sharing it with ChatGPT.

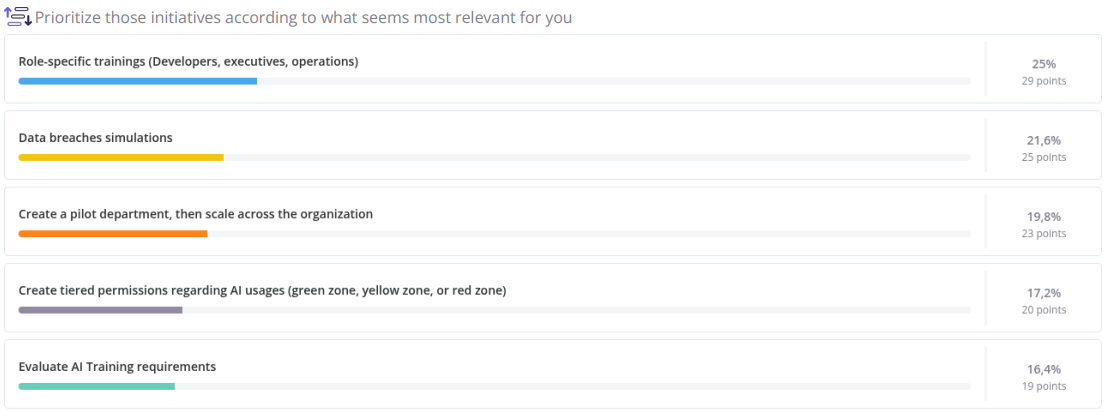

Our workshop made it clear: role-specific AI training should be a top priority among literacy initiatives, which range from data breach simulations to tiered permissions & more. From interns to executives, AI literacy has to become part of the culture.

The time for cautious observation is over

It’s time to embed AI Governance into your DNA, just like we learned to do with the GDPR.

Want to explore how to move from abstract obligations to concrete processes? Let’s talk here.

Meanwhile, check out our AI features over here.

Bottom line is: governance is alive & well. At least it is with Dastra's help!